Overview

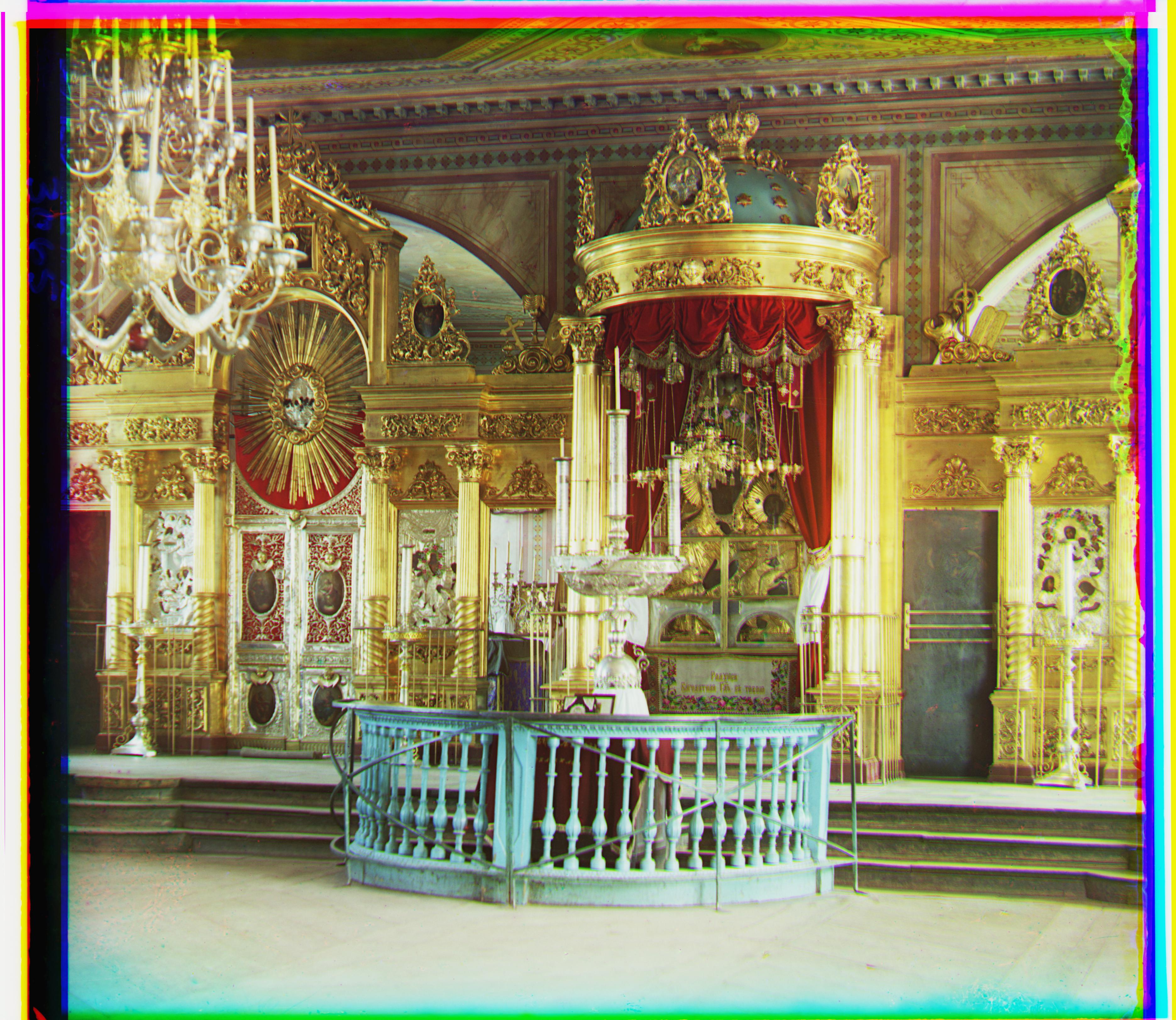

Sergei Mikhailovich Prokudin-Gorskii in 1907 anticipated the future of color photography by taking 3 photos per scene with a red, green, and blue filter over a black and white camera. These photos were bought by the Library of Congress, and this project aims to combine and colorize these photos as Gorskii envisioned.

Approach

I implemented the basic methods for aligning images (the naive approach of using trial and error to find offsets between images) as well as an image-pyramid approach for larger files that first scales down the image to a base case slightly larger than 32 by 32 pixels, find the offset vector through trial and error, and recursively iterate through larger versions of the image (multiplying the scale by 2 as well as the previous displacement vector) to finally arrive at a displacement for the largest image.

For a metric for how well aligned the images are, I used the L2 norm between the images and also implemented Normalized Cross-Correlation (NCC) for images that do not work well with the L2 norm comparison.

An extra feature I implemented that vastly improved the quality of the colorized image was using Canny edge detection to transform the images and align the images based off the transformed images. The Canny edge detector first applies a filter derived from a Gaussian function to smooth the image and reduce the influence of noise. Next, the intensity of the gradient at each pixel is calculated to find areas where intensity changes sharply. To refine the results, the algorithm trims down potential edges to single-pixel lines by discarding gradient magnitude values that are not local maxima. Lastly, it uses hysteresis thresholding to decide which remaining pixels truly form part of an edge based on their gradient strength. I expected NCC to work the best for these since they would compare edges directly, but actually the L2 norm turned out to work the best for the majority of photos in the dataset (probably because canny edge detection is a bit messy and sigma and the hysteresis thresholds would need to be finetuned for each image for NCC to work well). I've also implemented parallel threading in my code to speed up the image generation process from ~45 min for all photos to ~24 min for all photos.

Images are separated by method, with a comparison of techniques at the bottom. The blue image was taken to be an offset of (0,0), and displacement vectors are listed as (vertical, horizontal) pointing down and to the right of the top left corner for positive displacements.

Extra images I've chosen are marked with *asterisks*!

Naive L2 Norm with Edge Detection

Images are generated by trial and error of displacement vectors of RGB layers transformed by the Canny edge detector, comparing the L2 norm between them.

Naive Normalized Cross-Correlation (NCC) with Edge Detection

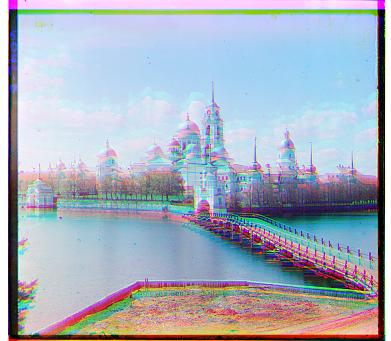

Likewise trial and error of displacement vectors, but over layers transformed with Canny edge detection. Possibly due to the low resolution and light colors, monastery seems to work best only with NCC and edge detection as a comparison metric.

Image-Pyramid Edge Detection L2 Norm

Generated images are with an image-pyramid approach transformed using Canny edge detection with sigma=1.5 and sweeping over 50 pixel displacements per layer. Comparisons between layers are done using the L2 norm. Lady required sigma=0 to be aligned properly, possibly due to the soft colors with lack of contrast making the gradients smaller and below the hysteresis threshold.

Image-Pyramid L2 Norm

Image-pyramid approach sweeping over 50 pixel displacements per layer. Comparisons between layers are done using the L2 norm. Edge detections seems to have a hard time with aligning the melon image precisely (although it is within several pixels). After inspecting the Canny transformed images, I believe this is because there is a lot more blue contrast in the image to determine edges, and not as much green and red contrast. This makes it slightly more difficult to align green and red images precisely onto the blue.

Edge Detection vs. No Edge Detection

Images transformed with Canny Edge Detection generally do a lot better on a couple of the images than the non-edge-detection counterparts, and around equal on others. Below are some examples.